"I was more fascinated by the bigger picture"

From the Commodore 64 to supercomputers: Martin Schultz has witnessed the development of data science firsthand. Today, he is researching how artificial intelligence is changing our understanding of weather and climate at Forschungszentrum Jülich. A conversation about scientific breakthroughs and interdisciplinary teams.

How did your path into data science develop – and what particularly fascinated you about weather and climate data?

I studied physics and earned my diploma in solid-state physics. After that, I actually wanted to go into consulting, but I kept hearing the same advice: “You should probably get a PhD first.” So I looked into that – but with a change in focus, because I had become very interested in environmental research. That’s how I eventually ended up in atmospheric science.

At the same time, I already had a great interest in computers – I was one of the early users of the Commodore 64. So, in atmospheric research, I increasingly found myself dealing with data-related problems.

During my postdoc at Harvard University, I mainly developed data analysis programs. At the time, this involved large aircraft measurement campaigns, where various data streams had to be combined. These came from different instruments, each with its own time resolution. It was therefore necessary to create a data format that made it possible to view and relate the various datasets together. From there, my path led into numerical modeling – first of weather processes, and later of atmospheric chemistry.

Initially, it was about transport processes, and then about chemical reactions, transformations, and similar phenomena. Afterward, I worked as a scientist – and quite soon also as a group leader – at the Max Planck Institute for Meteorology in Hamburg. There I was responsible for developing a chemistry module for the ECHAM climate model, turning it into a so-called chemistry-climate model. That work occupied me for several years.

Through that path, I eventually returned to Jülich, where I had also written my doctoral thesis. There I took over leadership of a group working on atmospheric chemistry modeling.

I was never the classic atmospheric chemist, focusing on individual reaction mechanisms in detail. I was more fascinated by the bigger picture – the entire Earth system. I was interested in how its various components interact, the roles of transport and mixing processes, and how all of that together influences the climate. And then, always the question: How can all of this be efficiently brought onto a computer? Over time, the calculations and models improved, but progress was slow. You’d spend ages fine-tuning code just to achieve the smallest improvements.

Our interview partner Martin Schultz

Martin Schultz

Martin Schultz heads the Earth System Data Exploration research group and is co-leader of the Large-Scale Data Science division at the Forschungszentrum Jülich. He is also a professor of Computational Earth System Science at the University of Cologne.

His primary research objective is the development and application of modern deep learning methods to Earth System Data, i.e. weather, air quality and climate data.

How did you then come to use machine learning and artificial intelligence in your research?

I had been interested in the topic early on, but the real breakthrough for me came in 2012 when I read a paper showing that artificial image recognition had become comparable to human performance. That was a moment of real clarity.

I thought these methods should also be applicable to weather phenomena and atmospheric processes. After all, weather forecasting often involves animated image sequences – not that different from a video. Shortly after, I submitted a proposal for an ERC Advanced Grant to apply AI methods to chemical processes in the atmosphere – and fortunately, it was approved. That became, so to speak, my seed funding to establish my own research group at Forschungszentrum Jülich focused on developing such AI approaches. That was in 2018.

From then on, things moved very quickly. By 2022, several models could produce weather forecasts at least as good as classical ones. That really woke up the entire community.

So, before the public was even talking much about AI, you were already working in that field. What were the biggest challenges back then?

At first, it was all about understanding the methodology: What’s actually going on there? How does it work? There’s a kind of threshold where machine learning moves from being a purely statistical method to something fundamentally new – an approach capable of groundbreaking results. That shift depends on model size as well as on new model concepts.

Understanding exactly what happens and how that transformation works was one of the big challenges early on. Another challenge was rethinking things from a process-oriented to a data-oriented perspective. I realized fairly quickly that many modeling problems had to be approached differently when using AI. In the natural sciences, you traditionally try to simplify a problem enough to fully understand it and express it in equations.

The AI approach is almost the opposite: the more data you have – even if imperfect or unstructured – the better the model can be trained, and the more insight you can gain.

I never would have thought I’d witness such a paradigm shift in my career.

So instead of thinking small, think big. AI has developed rapidly since then – what has changed the most for your work?

I never would have thought I’d witness such a paradigm shift in my career. Not just gradual improvement of existing methods, but a real rupture – that’s extraordinary. And being part of it at the forefront is a fantastic experience, I must say.

What has changed in daily work is, of course, collaboration. Until about ten years ago, I mainly worked with other meteorologists, atmospheric chemists, and related specialists. Since 2017, I’ve also been involved with the Supercomputing Center – which has brought more interaction with experts in computer architecture and high-performance computing.

What’s entirely new in recent years is the AI community – I had had almost no contact with it before. So I first had to build a network there. This is also reflected in the composition of my research group: nowadays we almost have the reverse situation from before. In the past, we were mostly domain scientists; today, the majority are computer scientists and engineers.

Many bring excellent machine learning expertise but are less familiar with atmospheric processes. So the challenge is finding the right balance: how much domain knowledge, how much machine-learning expertise – and also a good amount of engineering skill to implement the methods efficiently on high-performance computers. This mix makes the work demanding but also incredibly exciting, especially when building and leading such a group.

When you think about the coming years: what role will AI play in climate and weather research – and where do you see limits?

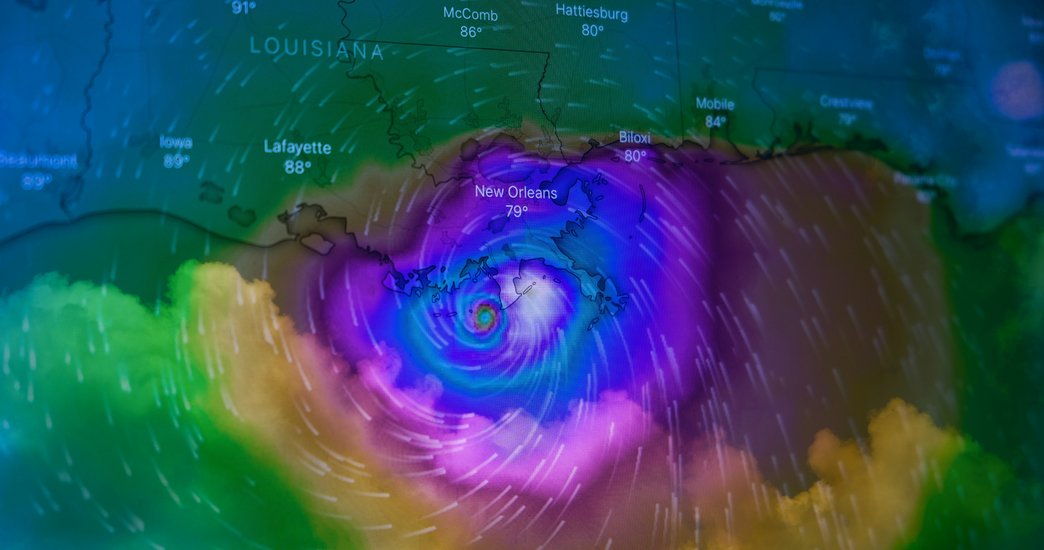

In weather research and forecasting, it’s very clear that AI now plays a central role. Many operational centers – such as the European Centre for Medium-Range Weather Forecasts or the German Weather Service – are among the international leaders. Especially in Europe, AI models are increasingly being used operationally.

Currently, the truly critical forecasts – those requiring the highest precision – still rely on classical numerical models. But AI models now run in parallel operations, are incorporated into evaluations, and increasingly complement traditional methods.

In climate research, it’s much more difficult. We’re now able to develop so-called climate simulators – AI models that mimic classical climate models. Of course, they also inherit the errors of those classical models. Still, it’s very useful because one can simulate many more scenarios and build large ensembles. It saves enormous computation time and often produces more detailed results – even if the underlying uncertainties remain.

In our Helmholtz Foundation Model Initiative project, we’re taking it a step further: exploring how to move beyond that boundary – toward AI models that can truly simulate the climate independently, not just reproduce existing models. There’s reason for hope: on the one hand, there are many data sources not yet fully exploited in classical modeling. On the other hand, we can try to combine strengths – for example, using traditional weather models to correct and adapt climate model forcing data with their errors to match real weather. If such corrections still work under changing climate conditions, we’d truly have created a better, more robust climate model.

Why are AI methods so much easier to apply in weather research than in climate research?

The main reason is that we have a much larger amount of observational data in weather research. There are also so-called reanalyses that have systematically and consistently simulated the weather over several decades – with astonishing quality. Of course, that’s not “the truth,” as some might think. There are errors in reanalyses too, but classical models come very close to reality that way.

That’s why AI models in weather forecasting can now match or even surpass classical models. In fact, there are now examples where AI models provide more stable and accurate forecasts over longer periods. Recent studies that directly integrate observational data into models also show that AI methods can make much better use of those data. In classical data assimilation, maybe ten to fifteen percent of available data are used – AI models can incorporate up to 75 or 80 percent. That leads to much more detailed information, which further improves weather forecasting.

In the climate system, the situation is entirely different. On long timescales, we have far fewer observations, and in some areas – like ocean depths or ice sheets – reliable measurements are extremely difficult. Many processes are also less well understood. Modeling spans enormous scale differences: from tiny cracks in ice to the breakup of entire glaciers. Such processes can still only be represented to a limited extent in numerical simulations. It’s impressive what’s possible today, but uncertainties remain large.

Additionally, the climate system consists of many interconnected processes – for example, the carbon cycle, energy balance, and moisture balance. Just think of thawing permafrost releasing methane. These processes operate on very different timescales – from hours to centuries. This coupling of timescales is something current AI models cannot capture – and I’m not aware of any architecture that can. There are first ideas about how this might one day be possible, but we’re still far from that. That’s what makes it so exciting: it’s a type of problem that doesn’t exist in the same way in other domains, like large language models.

It also means there isn’t a large AI community yet working on these specific challenges. Weather modeling was different – there, many were tackling similar issues. Now it’s a whole new kind of challenge.

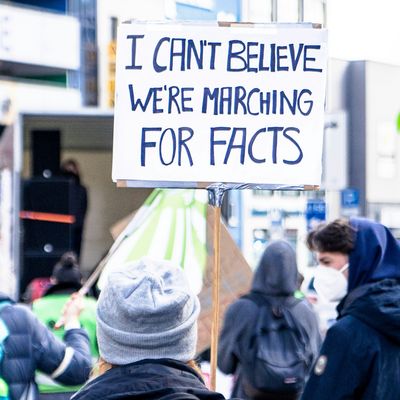

You shouldn’t focus solely on models and methods but cultivate a real curiosity and understanding of data.

What advice would you give to young people interested in data science who want to contribute to climate research?

Well, first of all, my take on data science: I actually think the term “data science” is already somewhat going out of fashion – in the sense that what is classically meant by it – applying statistical methods to data, curating, collecting, and analyzing them – now only covers a subset of the field. Machine learning has, in many ways, branched off from that.

The methods and techniques used in machine learning today are often quite different – they’re rarely taught in traditional data science courses. Even universities now have separate lectures and degree programs for them.

And I would really advise everyone to engage deeply with machine learning. I see an enormous future there – honestly, more than in classical data-science methods. Of course, much of the traditional work remains essential: even in machine learning, about 90 percent of the work still goes into data preparation, and maybe 10 percent into modeling. That means an interest in data is crucial.

You shouldn’t focus solely on models and methods but cultivate a real curiosity and understanding of data. From my experience, it also pays to focus early – not necessarily for life, but at least for a few years. Concentrate on one type of data and get to know it deeply. In climate research, that could be satellite data or data from climate models – or even more specifically, ocean, land, or atmospheric data.

The key is to develop your own perspective and expertise – to know the potential and limitations of your data. Then you can develop and apply methods purposefully. I think that’s the most meaningful long-term approach.